The Face as a Field of Conflict: Art versus Recognition Algorithms

Today, facial recognition is becoming an integral part of digital infrastructure, from surveillance cameras to social media filters. The face ceases to be only an image: it becomes a biometric code, a unit of data, a means of identification. This changes not only attitudes towards privacy, but also the perception of visual identity itself. Attention to these changes is increasingly reflected in artistic and design practices: through distortion, disguise, or direct interference with algorithmic perception.

The visual resistance this study addresses is not limited to declarations; it is manifest in form. Artists develop makeup that confuses recognition, create masks and digital patterns, and carry the algorithmic gaze into the space of installation and performance. In these projects, the face becomes not just a subject, but a surface for action, an error in the system, a zone where recognition is impossible.

The study is based on visual material showing different approaches to working with the face, ranging from documentary images and screenshots to performances, videos and clothing objects. All examples were selected for their visual distinctiveness, so that each section opens up a new perspective. The first step is to look at the principles behind the algorithms and how they change the way faces are seen. The focus then shifts to artistic intervention: the face turned into camouflage or distortion. Separate chapters analyse design solutions that create «invisibility» and performative works where surveillance becomes part of the action. The final question is why visual resistance is now becoming one of the key instruments for discussing power and identity.

Leo Selvaggio, people in masks at the Museum of Contemporary Photography, Chicago, 2020.

The analysis is based not only on visual observations, but also on texts on surveillance theory, digital media and contemporary visual culture. The authors’ own statements are also taken into account: how they formulate the objectives of their projects, describe their personal motives or interact with the audience. Comparing images, contexts and artistic gestures reveals a general shift in the understanding of the face, from an open image to a space of conflict, intervention and refusal to be recognized.

The key question of the study is how visual practices interfere with recognition systems and how they shape an alternative understanding of visibility and of the right to one’s own face in the digital age.

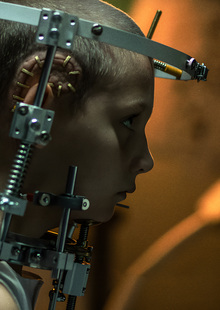

(left-right) Zach Blas / Elle Mehrmand / micha cárdenas / Paul Mpagi Sepuya, installation and performance of Face Cages, 2015.

1. How machine vision works and what it does to the face

Today’s technology sees the face not as a portrait, but as a structure. Machine vision locates key points (the eyes, the nose, the line of the chin) and turns them into a numerical pattern. The face is no longer an individual, but a vector that can be compared with millions of others in a database [3].
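The comparison step described above can be illustrated with a minimal Python sketch. It is a toy example under stated assumptions, not the method of any particular system: the four-number vectors, the names `person_a` and `person_b`, the `best_match` helper and the 0.95 threshold are all hypothetical, and real systems typically work with much longer vectors produced by a trained model.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two face vectors: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def best_match(probe, database, threshold=0.95):
    """Return (name, score) of the closest stored vector.

    If even the closest vector is below the threshold, the name is None:
    the face was measured but not identified.
    """
    best_name, best_score = None, -1.0
    for name, vector in database.items():
        score = cosine_similarity(probe, vector)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:
        return None, best_score
    return best_name, best_score

# Hypothetical database: each face reduced to a short numeric vector.
database = {
    "person_a": [0.9, 0.1, 0.3, 0.2],
    "person_b": [0.1, 0.8, 0.5, 0.4],
}

# A new photo of person_a yields a slightly different vector.
probe = [0.88, 0.12, 0.31, 0.18]

# A face whose key points are disturbed yields a vector that is
# far from every stored entry (values invented for illustration).
disturbed = [0.3, 0.3, 0.9, 0.9]

print(best_match(probe, database))
print(best_match(disturbed, database))
```

In this sketch the second call returns no name: a vector that falls far from every stored entry cannot be matched, and that is the gap the artistic strategies discussed in the following sections try to open.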

Google Vision API facial recognition tool

Algorithms are used in various fields: street surveillance, phone unlocking, marketing systems, policing. At the same time, the process itself remains invisible: a person does not always know that they have already been recognized. A new feeling emerges, that of being seen not by someone, but by something.

«Machine learning systems are trained daily on such images, taken from the Internet or from public institutions without context or consent.» Kate Crawford, Atlas of Artificial Intelligence, p. 89 (2023) [4]

Algorithmic errors are also significant. Systems are more likely to be wrong if the person is not white, not male, not statistically average. These are not just bugs but a reflection of built-in bias: models are trained on limited samples and reproduce social hierarchies [5].

Study of seven methods for thermal mapping of the face, 2013

Facial recognition is not only about identification, but also about power. It turns a face into a pass, an access filter, a statistical element. The face, formerly associated with identity, now becomes a digital identifier, detached from context and from human will. It is this attitude toward the face as an object of analysis that becomes the point of entry for artistic intervention. Artists respond to it by trying to break the reading, to cause a failure, to make the face inaccessible.

2. Artistic strategies: the face as a field of intervention

In response to machine reading, the face becomes a zone of distortion rather than an image. Visual resistance shows itself in the way the usual elements of appearance (eyes, eyebrows, cheekbones) are deliberately broken, hidden and distorted. Artists use makeup, masks, glitches and even random shapes to slip out of the algorithm’s field of view. It is not just a visual style, it is a way to disappear.

CV Dazzle: camouflage for the face

Adam Harvey’s project [6] was one of the first high-profile attempts to «fool» the recognition algorithm. He proposes non-standard hairstyles and makeup: bright lines that cover key points, asymmetry, glossy or matte fragments. Such an image confuses the algorithms by disrupting the predictable geometry of the face.

Adam Harvey, CV Dazzle Look 5, 2013.

The name itself combines «Computer Vision» and «Dazzle Camouflage» [7], a camouflage technique used on warships in the 20th century [8]. Instead of hiding an object, dazzle seeks to confuse the observer through visual distortion, sharp contrasts and fragmentation of shape. Harvey carries this approach into the digital environment: the face becomes the ship, and the machine becomes the observer.

Left: Adam Harvey, CV Dazzle Looks 1-4, 2010; right: dazzle camouflage on 20th-century warships.

CV Dazzle exploits what the algorithms depend on: symmetry, contours and the distribution of shadows. One of the principles of the project is the decentralization of attention: it becomes difficult for the algorithm to determine where the face begins and ends.

Hence the non-standard fringe that covers the eyes and the geometric makeup that breaks the line of the cheekbones. Here, makeup is not an adornment, but an active technology for avoiding recognition.

Machine face recognition results: top row, unaltered face; middle row, makeup; bottom row, makeup and fringe, 2010.

Facial Weaponization Suite

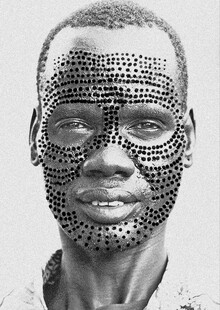

The artist Zach Blas [9] took a different path. His masks are collective faces, averaged from 3D scans of many people. They are not individual; on the contrary, they are as generic as possible. Their task is to hide a particular person behind a common form.

Zach Blas, view of «Difference Machines», Chicago, Alphawood Exhibitions, 2023.

The project is directed against the very logic of biometrics, which assumes that a digital truth can be extracted from any body: fixed data available for machine analysis. Instead, the artist offers bodies and faces that cannot be read: too collective, too anonymous, too strange for the algorithm. The mask, then, is not a defense, but an instrument for attacking the system.

Examples of the use of masks designed by Zach Blas, photos 2013-2014.

«I tried to show the political implications of the abstraction of the so-called truth of the body through digital data. Biometrics is abstract violence.» Zach Blas, interview with Filippo Lorenzin (2020) [10]

Such a mask is not only a defence but also a statement: a face is not obliged to be readable. It can be an instrument of protest, disorientation, collective identity. It is an attempt to take the power of seeing away from the algorithm.

Cloud Face: when an algorithm sees what is not there

The project by Shinseungback Kimyonghun [11] shows how indiscriminate algorithms are. Here the system looks for faces in the clouds. The artists fed it pictures of the sky, and the algorithm began to mark faces that were clearly not there. It is a visual malfunction in which the machine recognizes a form in abstract matter.

Humor, satire and absurdity are important tools in this fight. Artists turn algorithms against themselves to highlight their limitations.

Face detection in images of the sky, Cloud Face project, 2012.

3. Invisible design: clothing, textiles and accessories

When the face is recognized, the body is read, and even a person’s gait can become a biometric marker, the question arises: can clothing restore invisibility? In this part of visual resistance, design comes to the fore: fashion, textiles, accessories. They work not to attract attention, but to create visual noise, false detections, optical traps for machines.

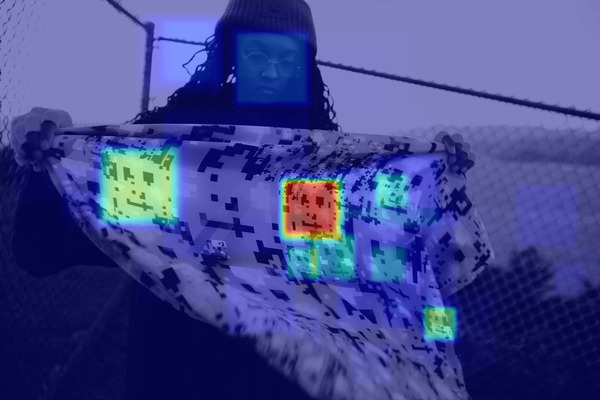

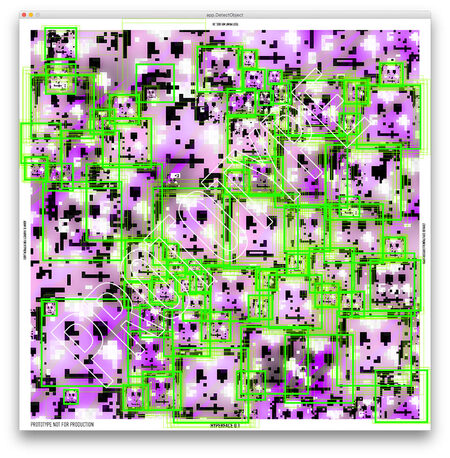

HyperFace: decoy clothing

The HyperFace [12] project, also created by Adam Harvey, in collaboration with Hyphen-Labs, offers textile patterns filled with false faces. These faces do not belong to anyone, but they visually resemble the features that the algorithm usually looks for. As a result, the system is confused: it cannot tell where the real face is and where the fake one is.

Pattern, designed by Adam Harvey to confuse facial recognition, 2017.

According to the author, HyperFace is not just about changing a person’s appearance, but about changing the context around them. «In camouflage you can think not only about the figure but also about the background,» Harvey says. «By changing what’s next to you, you can also change how computer vision works.» [12]

It is important that these things do not look like camouflage. They are visually neutral, almost decorative. This makes them particularly effective in urban space: protection that does not look like protection [13].

Examples of face detection on Adam Harvey’s fabric, 2017.

Privacy Visor: anti-surveillance glasses

Another type of intervention is accessories that disrupt the work of cameras. Glasses with infrared lights or LEDs, or with light-reflecting surfaces, make the eyes, a key point for recognition, inaccessible to the systems. To the human eye the glasses look ordinary, but to a camera they become a blind spot [15].

Japan’s Privacy Visor glasses, which reflect ambient light into the camera lens, prototype, 2013.

Some versions rely on simple LEDs, others on complex electronics that react when a camera is near. In both cases, this is not fashion as such, but a new type of technological veil: protective, but not militaristic.

4. Performance and the critique of surveillance

When the face becomes a readable surface and clothing becomes camouflage, the next step is to turn behavior itself into an instrument of resistance. Performance allows this to be done literally: surveillance becomes the stage, and the system becomes a participant. Artists use the live-action format to reproduce, dissect and disrupt the algorithmic relationship between the body and the machine eye.

Lauren Lee McCarthy: becoming the system

In the project SOMEONE [16], artist Lauren Lee McCarthy takes on the role of a digital platform. She integrates herself into other people’s daily lives and manages their home, their lighting, their calendar, even their bedtime, as smart home algorithms do, but manually. She watches through the cameras, speaks through the microphones and sends notifications.

According to the artist, SOMEONE is a human version of Amazon’s Alexa, a smart home intelligence for people in their own homes [16].

Lauren Lee McCarthy, photos of the command center during the two-month SOMEONE series, 2019.

This project operates on the boundary between trust and intrusion [17]. It shows how readily we have handed control of our lives to algorithms, and how disturbing it becomes when a real person turns out to be behind them.

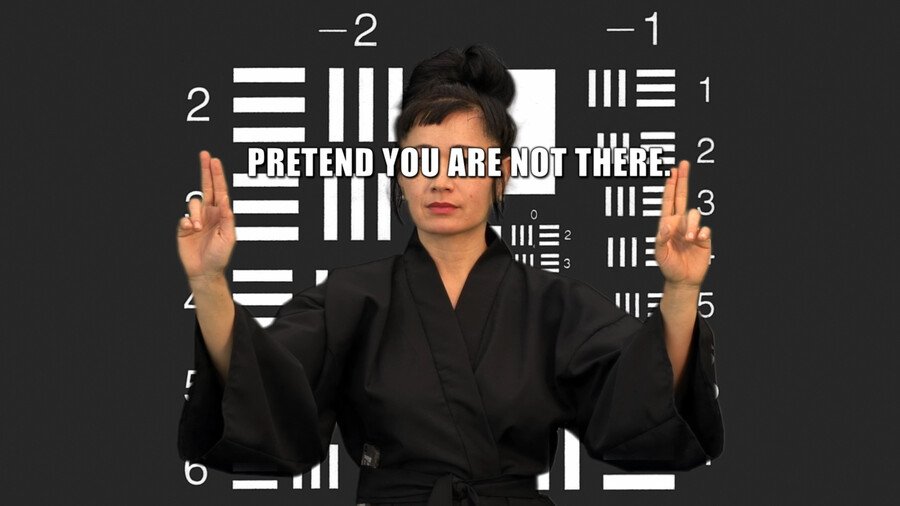

Hito Steyerl — How Not to Be Seen

In How Not to Be Seen [18], artist Hito Steyerl offers an ironic video tutorial on «disappearing» in the era of total surveillance. In the spirit of old educational films, the voice-over gives advice: «Don’t show up in public places,» «be too small to be seen,» «hide in the pixels.» The video combines humor, criticism and visual glitches, turning the attempt to hide into an aesthetic gesture.

Hito Steyerl, stills from the video How Not to Be Seen, 2013.

It is not just a manual for leaving the frame, but a reflection on how visibility is regulated — by whom, why, and in whose interest.

Leo Selvaggio: a mask for everyone

In the URME Surveillance project [1], artist Leo Selvaggio creates hyperrealistic masks of his own face. He invites anyone who wishes to «try on» his face, literally and physically, and thus make identification harder for the system. It is an act of radical generosity in which the artist shares not property, but his own face.

Leo Selvaggio, URME Surveillance mask, 2014.

This mask is not a defense but an intervention. It creates a situation in which the same face, recognized by the system, appears in different places at the same time. This effect undermines the basic assumption of biometrics: that every human being is unique and traceable. The mask is available on the official website: the 3D model costs $200 [19], and the artist also offers his face as a free download so that anyone can make their own paper mask [20].

Visitors to the Big Brother Awards wearing masks of the guest Leo Selvaggio, 2016.

5. Why it matters: art versus algorithms

When algorithms become intermediaries between the body and the world, visual resistance becomes particularly important. It does not merely «illustrate» protest, but offers another way of living in an environment of digital control. Here art becomes not a secondary, but a central tool for criticism and rethinking. The projects considered in the study are united by a shared desire to undermine the stability of the biometric gaze. This is done in different ways: by distorting the face, by causing the algorithm to fail, by changing behavior or the environment. The point in every case is to restore a person’s right to be unreadable, to be mistaken for someone else, to be confused.

Procession of Biometric Sorrows, public action, Mexico, 2014.

The face in these projects is not an image, but an interface of power. It is read, recorded, interpreted and, through this, subjugated. Artistic practices hack this interface by turning the face into a mask, camouflage, noise, a collective form, or a staged gesture. In this sense, they do not simply «prevent the system from working»; they show that the system sees in its own particular way, and that this vision can be called into question.

Adam Harvey, CV Dazzle Looks 6 and 7, 2020.

This is an aesthetics of glitch, failure and disappearance. It is an attempt not to leave the field of view, but to rewrite its terms. It is not a disguise for slipping away, but a new form of presence: inaccessible to the algorithm, yet meaningful in a cultural and political context.

Conclusion

Today, the face is not just a portrait or an object of depiction. It is a field of conflict between human and algorithm, between the desire to be seen and the right to be hidden. Visual resistance, from makeup to digital patterns, becomes a way to regain control [21] over how and by whom a person is seen.

Adam Harvey, posters for the Think Privacy campaign, 2016 and 2018.

In this struggle, art not only shapes a new aesthetics; it defends the very possibility of being indistinguishable, and therefore alive.

1. Leonardo Selvaggio. URME Surveillance. URL: https://leoselvaggio.com/urmesurveillance (accessed 20.05.2025).
2. Zach Blas. Face Cages. URL: https://zachblas.info/works/face-cages/ (accessed 20.05.2025).
3. Google Codelabs. Using the Vision API with Python. URL: https://codelabs.developers.google.com/codelabs/cloud-vision-api-python#6 (accessed 19.05.2025).
4. DOKUMEN.PUB. Atlas of Artificial Intelligence: A Guide to the Future 9785171485672 [Electronic resource]. URL: https://dokumen.pub/978571485672.html (accessed 19.05.2025).
5. 300 Faces In-The-Wild Challenge: Database and results. URL: https://ibug.doc.ic.ac.uk/media/uploads/documents/sagonas_2016_imavis.pdf (accessed 19.05.2025).
6. Adam Harvey. CV Dazzle [Electronic resource]. URL: https://adam.harvey.studio/cvdazzle/ (accessed 20.05.2025).
7. Wikipedia. Computer vision dazzle. URL: https://en.wikipedia.org/wiki/Computer_vision_dazzle (accessed 19.05.2025).
8. Wikipedia. Dazzle camouflage. URL: https://en.wikipedia.org/wiki/Dazzle_camouflage (accessed 19.05.2025).
9. Zach Blas. Facial Weaponization Suite. URL: https://zachblas.info/works/facial-weaponization-suite/ (accessed 20.05.2025).
10. Digicult: Digital Art, Design and Culture. Mediated cages. Interview with Zach Blas. URL: https://digicult.it/articles/mediated-cages-interview-with-zach-blas/ (accessed 21.05.2025).
11. Shinseungback Kimyonghun. Cloud Face. URL: https://ssbkyh.com/works/cloud_face/ (accessed 19.05.2025).
12. Adam Harvey. HyperFace. URL: https://adam.harvey.studio/hyperface/ (accessed 20.05.2025).
13. The Guardian. Anti-surveillance clothing aims to hide wearers from facial recognition [Electronic resource]. URL: https://www.theguardian.com/technology/2017/jan/04/anti-surveillance-clothing-facial-recognition-hyperface (accessed 20.05.2025).
14. The Independent. Anti-surveillance clothing unveiled to combat facial recognition technology. URL: https://www.independent.co.uk/news/science/anti-surveillance-clothing-facial-recognition-technology-hyperface-adam-harvey-berlin-facebook-apple-government-a7511631.html (accessed 20.05.2025).
15. OSP News, Open Systems Publications. Privacy Visor glasses «blind» facial recognition systems. URL: https://www.osp.ru/news/2015/0817/13029502 (accessed 21.05.2025).
16. Lauren Lee McCarthy. SOMEONE. URL: https://lauren-mccarthy.com/SOMEONE (accessed 20.05.2025).
17. Vimeo. SOMEONE documentation. URL: https://vimeo.com/369952685 (accessed 20.05.2025).
18. Artforum. Hito Steyerl, How Not to Be Seen: A Fucking Didactic Educational .MOV File, 2013. URL: https://www.artforum.com/video/hito-steyerl-how-not-to-be-seen-a-fucking-didactic-educational-mov-file-2013-165845/ (accessed 20.05.2025).
19. URME Surveillance. URME Prosthetic [Electronic resource]. URL: https://www.urmesurveillance.com/urme-prosthetic/ (accessed 20.05.2025).
20. URME Surveillance. URME Paper Mask. URL: https://www.urmesurveillance.com/urme-paper-mask/ (accessed 20.05.2025).
21. Adam Harvey. Think Privacy [Electronic resource]. URL: https://adam.harvey.studio/think-privacy/ (accessed 21.05.2025).